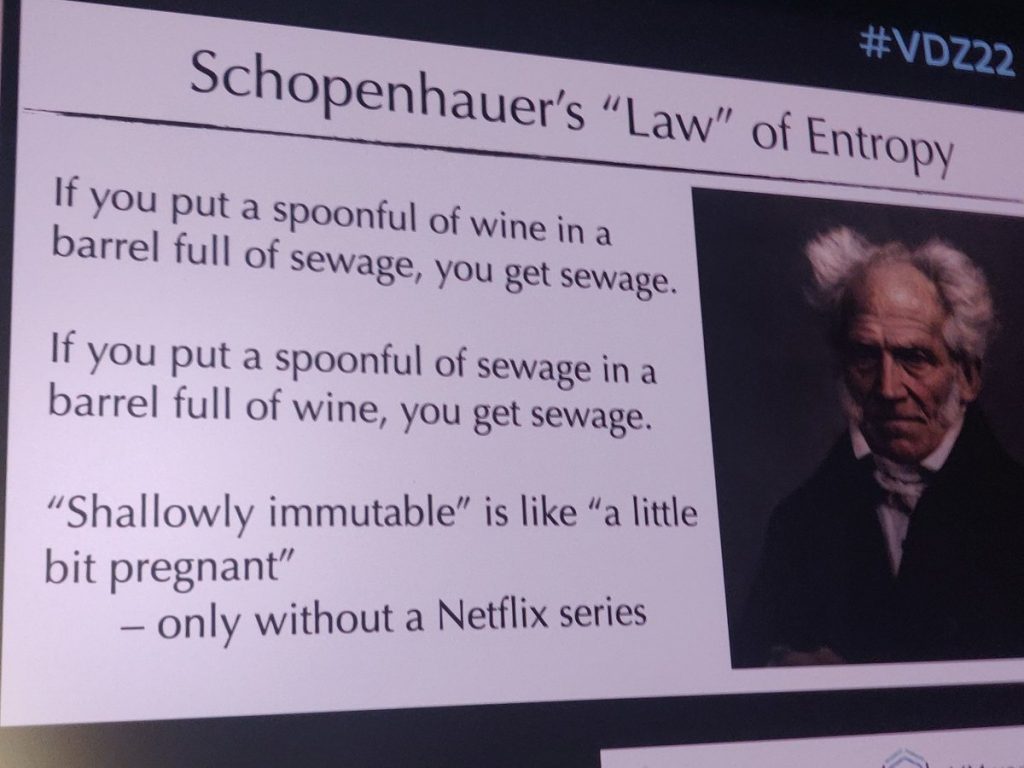

I gave a talk entitled “Change is the Only Constant” (video) at the excellent Voxxed Days conference in Zurich last month. It got a little attention on Twitter, particularly this slide, tweeted by Mario Fusco:

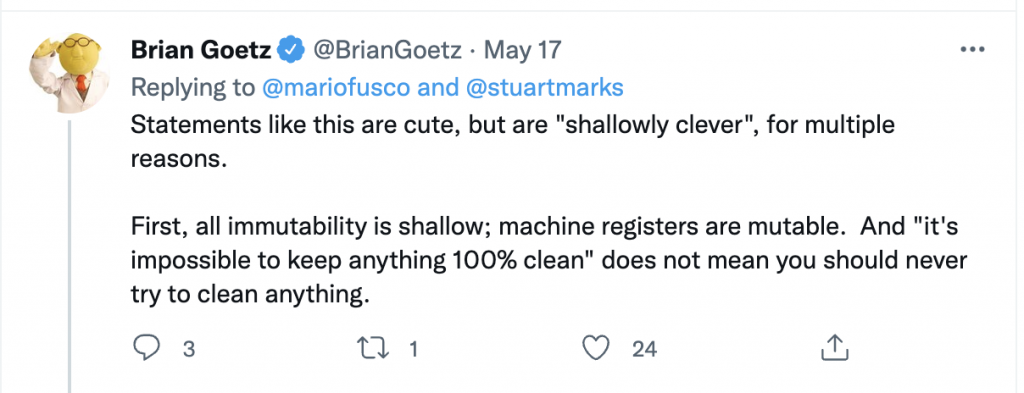

The point of the slide is that immutability is, strictly speaking, an all-or-nothing proposition: mutability anywhere in a data structure makes it mutable as a whole. “Schopenhauer’s” “Law” isn’t a law, isn’t about entropy, and seems to have absolutely no connection to Schopenhauer, but none of this harms its propagation as a meme across the Internet. I’m not myself wildly enthusiastic about the slide, but I put it in the talk because it’s a favourite of Stuart Marks, who is the primary maintainer of the Java Collections Framework and an infallible source of ideas and good advice to me. An unexpected payoff, though, was this response from Brian Goetz, who tweeted:

So I’m happy to take credit for the slide, because of course to be called any kind of clever by Brian is a compliment, however backhanded, to be treasured. Now at least the problem of knowing what to have written on my tombstone is definitively solved!

More seriously, Brian’s point is obviously fundamentally correct. It’s always been extremely difficult to be certain that you’ve got anything 100% right in computing. This reminds me of the argument in the formal verification community—or rather, the argument used against the verification community—that if the correctness proof of your program depends on the correctness of a verification system that itself hasn’t actually been verified, your argument is built on (unreinforced) sand. And if you do prove the correctness of your verifier, that proof will depend on the correctness of the layer beneath it —and so on, all the way down to the hardware which, as we know from the disasters of Spectre and Meltdown, is nowadays just too complex to analyse completely. The same argument, that we’re ultimately dependent on the hardware properties, applies to immutability.

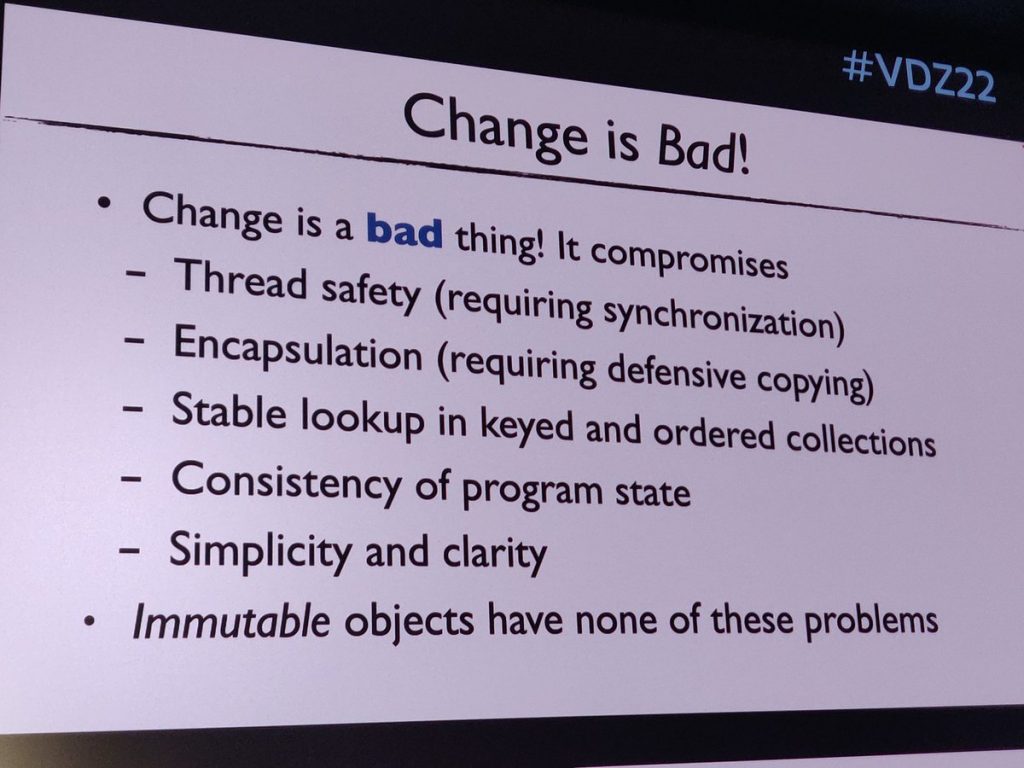

And to be fair to the talk, the slide about “Schopenhauer’s Law” was taken out of context: the rest of the talk did lay emphasis on the importance of reducing mutability. It’s worth looking at the value of this in a little more detail. Mario also tweeted this slide, on the problems of mutability:

:

Before looking at this in detail, we should dispose of a question of terminology that often gets in the way of discussions of immutability: are we discussing individual objects, or object graphs? This is a real problem in talking about collections, because preventing change to the state of a collection object in itself won’t necessarily prevent the ill effects of mutability listed in the slide. That’s because their impact depends on the properties of the entire object graph, and the immutability of the collection object is an entirely separate question from the mutability of the objects that it contains. We might reduce confusion by reserving the term “immutable” to object graphs, abandoning the notion of “shallow” immutability even if that would involve losing the source of jokes like Brian’s. This was the thinking that led to the label “unmodifiable” being given to the collections implementations introduced in Java 9. Here we’ll say that the opposite of “mutable” for an object is “unmodifiable”; for an object graph it is “immutable”.

Returning to Brian’s objection, are the problems of the second slide really reduced by reducing mutability? Consider an object graph, all but one of whose elements are unmodifiable:

- This structure as a whole will not be thread-safe, but protecting only the single mutable element is all that is required to make it so.

- Defensive copying will still be required, but that can again be restricted to the single mutable element.

- The question of stable lookup in keyed and ordered collections depends on the definitions of equality or comparison: if these relations can be made independent of the state of the mutable element, then decreasing mutability will have paid off.

- Consistency of program state refers to invariants that hold between object graphs, rather than within them as object-oriented principles demand. For instance, systems supporting user interfaces must ensure that the state of the underlying system is consistently mirrored in the interface, for example by disabling currently inapplicable UI elements. If the mutable element forms part of such an invariant, then extra care has to be taken whenever it is changed to ensure the invariant is maintained by corresponding adjustments of its other component(s).

- The gains in simplicity and clarity are proportional to the number of unmodifiable elements in the graph: each of these has only a single possible state, and is accordingly that much simpler to reason about.

This discussion may help to answer a popular question about the Java 9 unmodifiable collections: Why have them? Clearly, reducing mutability does bring gains, even if immutability in its perfect form is an ideal we can never reach.

I would call the result a draw: Goetz 1, Schopenhauer 1. And, as a verdict, how shallow is that?